AI-based applications have recently become popular and have changed how we interact with technology. To gain a competitive edge, the industry is adopting AI for everything from personalized virtual assistants to intelligent recommendations. Evaluating the performance and reliability of AI systems is therefore crucial to ensure that they deliver high-quality user experiences, meet industry regulations, and optimize resource utilization.

Unlike traditional software, AI applications cannot be evaluated on processing power and responsiveness alone. The AI algorithms and data processing models themselves must also be tested and tuned to meet specific performance requirements.

What's Different About the Performance Testing of AI-based Applications?

Before diving into the performance testing of AI-based applications, it's essential to understand how they differ from traditional applications. The difference comes primarily from their integration of AI models, such as generative AI, computer vision, NLP, and machine learning models, running as backend components.

From a mobile platform perspective, AI apps must stay responsive in real time and sustain that responsiveness under varying loads, especially for applications like augmented reality or image processing. Each AI model also needs to be evaluated for performance against new datasets. As a result, performance testing of AI-based applications differs in methodology, capabilities, and level of automation.

Below are a few areas that make the performance of AI applications different:

- The quality, quantity, and nature of training datasets influence performance.

- The AI algorithm's processing and output generation add inference time.

- Feedback loops adjust and improve the model based on the feedback data received.

- Testing involves analyzing historical data and generating synthetic user behaviors, allowing for a more realistic simulation of user interactions.

QASource’s Approach for Performance Testing of AI Apps

Establishing a standard for testing AI-powered features in the application is not straightforward because tests depend not only on the application software but also on the domain, dataset used, and AI features used in the application.

Here, performance testing aims to determine the response time, failure point, and processing capacity of the AI features. QASource's approach to preparing a test plan for performance testing in AI therefore centers on the following standard tests:

- Stress Test: To find the failure point and establish benchmarks

- Data Drift Test: To evaluate the model's performance when input data distribution changes over time

- Reliability Test: To assess model recovery time by simulating system failures and interruptions

- Volume Test: To inject massive test datasets to determine the AI algorithm's capacity

- Adaptive Learning Test: To monitor the model's performance during retraining as the dataset complexity changes
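
As an illustration of the Data Drift Test above, here is a minimal Python sketch that compares a feature's distribution at training time with its distribution in production. It uses the Population Stability Index (PSI), which is one common drift measure and our choice for this example, not a prescribed part of the approach. The function name, the bin count, and the synthetic Gaussian data are all assumptions made for the example.

```python
import math
import random


def population_stability_index(baseline, current, bins=10):
    """Compare two samples of one numeric feature; a larger PSI means more drift."""
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 if every baseline value is identical.
    width = (hi - lo) / bins or 1.0

    def proportions(sample):
        counts = [0] * bins
        for value in sample:
            # Values outside the baseline range are clamped into the edge bins.
            index = int((value - lo) / width)
            counts[min(max(index, 0), bins - 1)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(count / len(sample), 1e-6) for count in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


# Synthetic data (an assumption for the demo): one stable feed, one shifted feed.
rng = random.Random(42)
training = [rng.gauss(0.0, 1.0) for _ in range(5000)]
stable = [rng.gauss(0.0, 1.0) for _ in range(5000)]
shifted = [rng.gauss(1.0, 1.0) for _ in range(5000)]

psi_stable = population_stability_index(training, stable)
psi_shifted = population_stability_index(training, shifted)
print(f"PSI stable feed:  {psi_stable:.4f}")
print(f"PSI shifted feed: {psi_shifted:.4f}")
```

A frequently quoted rule of thumb treats a PSI below 0.1 as stable and above 0.25 as significant drift, but these thresholds are conventions and should be tuned per model and per feature.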

Let's take an example of an application with AI-powered features like a Generative AI-powered ChatBot, image tagging and facial recognition, and user behaviour analysis. Here, the ideal approach would be to evaluate the performance of business workflows from a user perspective and the performance of the application's AI components.

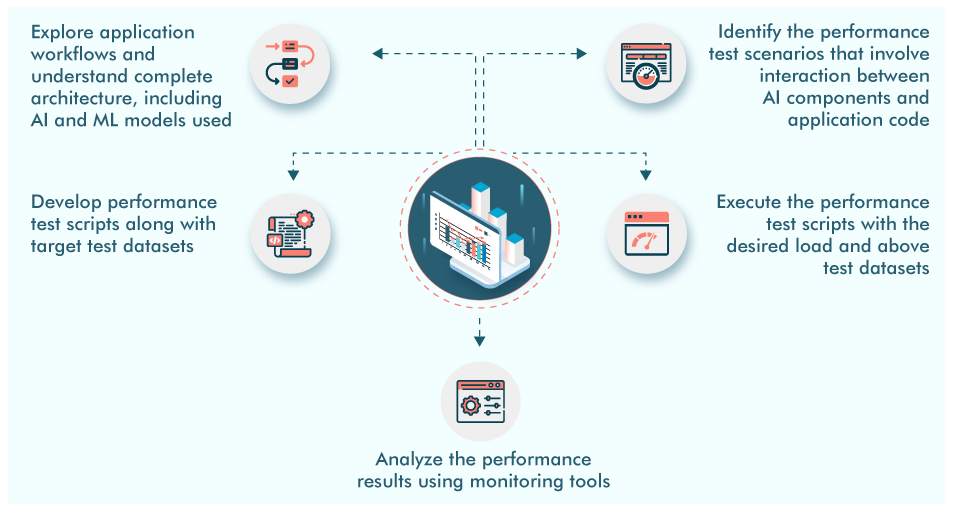

Steps for Performance Testing of AI Apps

To troubleshoot performance bottlenecks, use application performance monitoring (APM) tools, many of which now come with AI capabilities and can recommend optimized fixes.

It's essential to monitor the performance of the application's AI components, which can give insights like:

- Data flow between backend AI components

- Data transactions between AI components and application code

- Visibility of all components of the AI stack alongside services and infrastructure

- Tracing and analyzing the volume of data generated by the AI tech stack
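
To make the insights above concrete, here is a minimal Python sketch of the kind of per-component tracing an APM tool performs. It is a hypothetical stand-in, not the API of any real monitoring product: the `traced` decorator, the `TRACE_LOG` store, and the two toy components (`tokenize` and `tag`) are invented for illustration.

```python
import functools
import time
from collections import defaultdict

# Hypothetical in-memory store:
# component name -> list of (duration_seconds, input_size, output_size)
TRACE_LOG = defaultdict(list)


def traced(component):
    """Record the duration and payload sizes of every call to a component."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(payload):
            start = time.perf_counter()
            result = func(payload)
            elapsed = time.perf_counter() - start
            TRACE_LOG[component].append((elapsed, len(payload), len(result)))
            return result
        return wrapper
    return decorator


@traced("tokenizer")
def tokenize(text):
    # Toy stand-in for a preprocessing component.
    return text.split()


@traced("tagger")
def tag(tokens):
    # Toy stand-in for a model component.
    return [(token, "LONG" if len(token) > 4 else "SHORT") for token in tokens]


def summarize(log):
    """Aggregate call counts, time spent, and data volume per component."""
    summary = {}
    for component, records in log.items():
        summary[component] = {
            "calls": len(records),
            "total_seconds": sum(record[0] for record in records),
            "input_size": sum(record[1] for record in records),
            "output_size": sum(record[2] for record in records),
        }
    return summary


tag(tokenize("performance testing of AI apps"))
report = summarize(TRACE_LOG)
for component, stats in report.items():
    print(component, stats)
```

In a real system the records would be shipped to a monitoring backend rather than kept in a dictionary, but the same three measurements (who called what, how long it took, and how much data moved) underpin the insights listed above.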

KPIs to be Measured

Below are significant KPIs that need to be measured while executing performance tests of AI apps:

- Model Inference Time: The time a pre-trained AI/ML model takes to process new data and generate output.

- Throughput: The number of data input and output operations or inference calls handled per unit of time during model inference.

- Failure Rate: The share of requests that end in errors, timeouts, or crashes during AI-generated data processing.

- Inference Latency: The delay between sending input data to the AI model and receiving predictions.

- Response Time: The response time of AI/ML components and how much it varies.

- AI Algorithms Concurrency: How well the system copes with processing large datasets, training complex models, and handling multiple inference requests at the same time.

- Resource Utilization: Tracking metrics like CPU and memory usage, which may indicate AI computational intensity.
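
Several of these KPIs can be collected with a small load harness. The Python sketch below is a minimal example under stated assumptions: `fake_inference` is a hypothetical stand-in for a real model call (it sleeps briefly and fails about 10% of the time), and the request count and concurrency level are arbitrary. It reports throughput, failure rate, and latency percentiles under concurrent requests. It does not measure resource utilization, which would need OS-level or APM tooling.

```python
import random
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def fake_inference(prompt):
    """Hypothetical stand-in for a real model call."""
    time.sleep(random.uniform(0.001, 0.005))
    if random.random() < 0.1:
        raise TimeoutError("simulated inference timeout")
    return prompt.upper()


def timed_call(prompt):
    """Return (latency_seconds, succeeded) for a single inference request."""
    start = time.perf_counter()
    try:
        fake_inference(prompt)
        succeeded = True
    except Exception:
        succeeded = False
    return time.perf_counter() - start, succeeded


def run_load(requests, concurrency):
    """Send `requests` calls through `concurrency` worker threads and collect KPIs."""
    prompts = [f"request {i}" for i in range(requests)]
    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, prompts))
    wall_seconds = time.perf_counter() - wall_start

    latencies = [latency for latency, _ in results]
    failures = sum(1 for _, succeeded in results if not succeeded)
    # quantiles(n=100) returns 99 cut points; index 49 is p50, index 94 is p95.
    cuts = statistics.quantiles(latencies, n=100)
    return {
        "requests": requests,
        "throughput_rps": requests / wall_seconds,
        "failure_rate": failures / requests,
        "latency_p50_s": cuts[49],
        "latency_p95_s": cuts[94],
        "latency_stdev_s": statistics.stdev(latencies),
    }


kpis = run_load(requests=200, concurrency=8)
for name, value in kpis.items():
    print(f"{name}: {value}")
```

Rerunning `run_load` with increasing `concurrency` values gives a simple view of how latency and failure rate change as load grows, which is the starting point for a stress test.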

Conclusion

Effective performance testing of AI applications is essential to ensure smooth operation in diverse environments. By rigorously evaluating inference time, resource usage, and scalability, testers can identify and address potential performance bottlenecks. The QASource team, with its experience in performance testing, follows an approach that validates the reliability of AI systems. Continuous iteration, documentation, and collaboration with development teams are vital components of a successful performance-testing strategy for AI applications. Together, these practices contribute to the overall efficiency and responsiveness of AI applications, ensuring they meet user expectations under varying conditions.

Have Suggestions?

We would love to hear your feedback, questions, comments, and suggestions. This will help us to be better and more useful next time.

Share your thoughts and ideas at knowledgecenter@qasource.com