What Is Llama 2?

Llama 2 is a large language model (LLM) notable for its advanced capabilities and open-source availability. It is adept at handling various text-based tasks, such as language translation, content creation, and informal question-answering, making it a highly versatile tool.

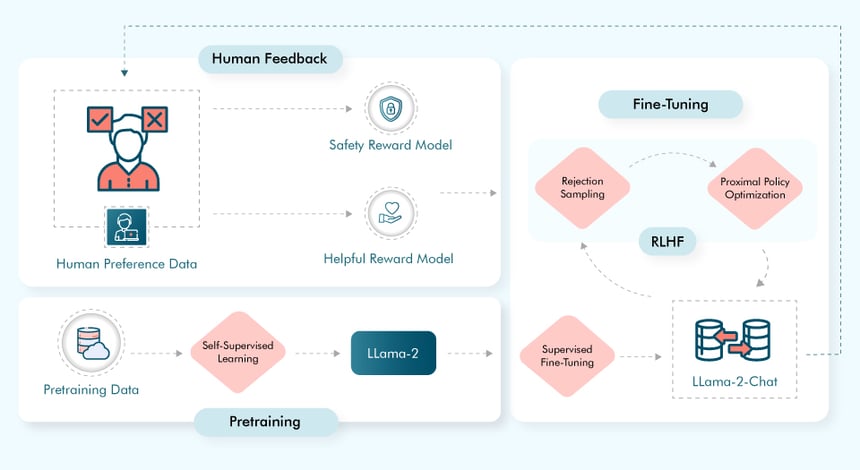

Compared to Llama 1, Llama 2 was trained on 40% more data and offers double the context length, so it can take more of a conversation or document into account when responding. The model was pre-trained on publicly available online data sources. The fine-tuned version, Llama-2-chat, draws on over 1 million human annotations and uses reinforcement learning from human feedback (RLHF) to improve safety and usefulness.

Below is the high-level workflow for working with the Llama 2 chat model.

Features of Llama 2

- Enhanced Performance: Llama 2 excels in reasoning, problem-solving, and factual accuracy, as evidenced by various benchmarks.

- Improved Code Generation: The model demonstrates reliability in generating correct and efficient code.

- Greater Contextual Understanding: Capable of processing up to 4096 tokens, Llama 2 effectively comprehends and responds to complex queries.
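To make the 4096-token limit concrete, here is a minimal sketch of the budgeting arithmetic, assuming the prompt and the generated text share the same context window. The function name and constant are hypothetical helpers for illustration, not part of any Llama 2 library.

```python
LLAMA2_CONTEXT_TOKENS = 4096  # context length stated in the feature list


def remaining_generation_budget(prompt_token_count: int,
                                context_tokens: int = LLAMA2_CONTEXT_TOKENS) -> int:
    """Return how many new tokens still fit in the window after the prompt."""
    if prompt_token_count < 0:
        raise ValueError("prompt_token_count must be non-negative")
    # A prompt longer than the window leaves no room for generated tokens.
    return max(context_tokens - prompt_token_count, 0)


if __name__ == "__main__":
    print(remaining_generation_budget(3000))  # 1096
    print(remaining_generation_budget(5000))  # 0
```

In practice the prompt token count would come from the model's tokenizer; a prompt that exceeds the window has to be shortened or truncated before generation.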

Increased Versatility

- Multiple Model Sizes: Available in 7, 13, and 70 billion parameter models to suit diverse needs and computational resources.

- Fine-tuned for Conversation: The model offers engaging and informative dialogue thanks to extensive human-annotated data.

- Open-source and Accessible: Llama 2's open-source nature encourages community-driven development and innovation.
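As a rough guide to matching a model size to available hardware, the sketch below estimates the memory needed for the weights alone. It assumes the nominal parameter counts (7, 13, and 70 billion) and a fixed number of bytes per parameter; real usage is higher because activations and the attention cache also need memory. The names are illustrative.

```python
# Nominal parameter counts for the three released sizes.
MODEL_PARAMS = {"7B": 7e9, "13B": 13e9, "70B": 70e9}

# Bytes needed to store one parameter in common numeric formats.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_gb(model_size: str, dtype: str = "float16") -> float:
    """Approximate memory for the weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return MODEL_PARAMS[model_size] * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for size in MODEL_PARAMS:
        print(f"{size}: ~{weight_memory_gb(size):.0f} GB of weights in float16")
```

Under these assumptions the 7B model needs about 14 GB for float16 weights, while the 70B model needs about 140 GB, which is why the smaller sizes are the usual starting point on a single machine.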

Additional Highlights

- Massive Training Dataset: The model's training involved 2 trillion text tokens, contributing to its extensive knowledge base.

- Responsible Use Guidance: Meta provides guidelines to ensure ethical and transparent AI development.

- Active Community Support: Users can benefit from ongoing research and community contributions.

How To Use Llama 2 in Python

Once the model weights are downloaded, a few more steps are needed before the Llama 2 models can be used from Python.

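- Create a symbolic link to the tokenizer inside the downloaded chat model directory. A relative link target is resolved from the link's own location, so it must point one level up:

ln -s ../tokenizer.model ./llama-2-7b-chat/tokenizer.model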

- Convert the Llama 2 weights to the Hugging Face Transformers format. Run the following commands from the current directory. First, locate the conversion script that ships with the transformers package:

TRANSFORM=`python3 -c "import transformers;print('/'.join(transformers.__file__.split('/')[:-1])+'/models/llama/convert_llama_weights_to_hf.py')"`

- Then install protobuf and run the conversion:

pip install protobuf && python3 $TRANSFORM --input_dir ./llama-2-7b-chat --model_size 7B --output_dir ./llama-2-7b-chat-hf

- Once the command completes, the converted model is available in Hugging Face format in the directory llama-2-7b-chat-hf.
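The same preparation can be scripted in Python. The following is a sketch only: it assumes the directory layout used in the commands above, that the installed transformers version ships the conversion script at the path shown, and that protobuf is already installed. The helper names `link_tokenizer` and `conversion_command` are hypothetical.

```python
import importlib.util
import os
import subprocess
import sys
from pathlib import Path
from typing import List


def link_tokenizer(tokenizer: Path, model_dir: Path) -> Path:
    """Symlink the tokenizer into the model directory using an absolute target."""
    link = model_dir / "tokenizer.model"
    # is_symlink() also catches a dangling link left by an earlier attempt.
    if not (link.is_symlink() or link.exists()):
        os.symlink(tokenizer.resolve(), link)
    return link


def conversion_command(transformers_dir: Path, input_dir: str,
                       model_size: str, output_dir: str) -> List[str]:
    """Build the command line for the conversion script inside transformers."""
    script = transformers_dir / "models" / "llama" / "convert_llama_weights_to_hf.py"
    return [sys.executable, str(script),
            "--input_dir", input_dir,
            "--model_size", model_size,
            "--output_dir", output_dir]


if __name__ == "__main__":
    model_dir = Path("llama-2-7b-chat")
    spec = importlib.util.find_spec("transformers")
    if not model_dir.is_dir() or spec is None or spec.origin is None:
        print("Model directory or transformers package not found; nothing to do")
    else:
        link_tokenizer(Path("tokenizer.model"), model_dir)
        cmd = conversion_command(Path(spec.origin).parent,
                                 "./llama-2-7b-chat", "7B", "./llama-2-7b-chat-hf")
        subprocess.run(cmd, check=True)  # raises if the conversion fails
```

Using an absolute link target avoids having to reason about where a relative target is resolved from, and building the command as a list keeps paths with spaces intact.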

After these steps, you can use the downloaded Llama 2 models in your Python programs for various AI-driven tasks.
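As an illustration of that last step, here is a minimal sketch of loading the converted model with the Hugging Face transformers library and generating a reply. It assumes transformers, PyTorch, and sentencepiece are installed, that the machine has enough memory for the 7B model, and that the chat model expects prompts wrapped in `[INST] ... [/INST]` markers. The function names are illustrative.

```python
from pathlib import Path

MODEL_DIR = "./llama-2-7b-chat-hf"  # output directory of the conversion step


def build_chat_prompt(user_message: str, system_prompt: str = "") -> str:
    """Wrap a message in the [INST] chat markers (assumed prompt format)."""
    if system_prompt:
        return f"[INST] <<SYS>>\n{system_prompt}\n<</SYS>>\n\n{user_message} [/INST]"
    return f"[INST] {user_message} [/INST]"


def generate_reply(user_message: str, max_new_tokens: int = 128) -> str:
    """Load the converted model and return its reply to a single message."""
    # Imported lazily so the prompt helper works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_DIR)
    model = AutoModelForCausalLM.from_pretrained(MODEL_DIR)
    inputs = tokenizer(build_chat_prompt(user_message), return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated text is returned.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


if __name__ == "__main__":
    if Path(MODEL_DIR).is_dir():
        print(generate_reply("Explain what a symbolic link is in one sentence."))
    else:
        print(f"{MODEL_DIR} not found; run the conversion step first")
```

This sketch reloads the model on every call for simplicity; a real program would load the tokenizer and model once and reuse them.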

Conclusion

Llama 2's integration into Python opens up a world of AI and software development possibilities. For those looking to implement or test such advanced AI models in their projects, QASource offers expertise in ensuring smooth integration and optimal performance, aligning with the latest AI technology and software testing standards.
