Big data is used by various applications for analysis, predictions, and decision-making. The growth, efficiency, and performance of big data applications can have a significant impact on our daily lives.

Big Data And Hadoop Performance Testing

There is a growing need for performance testing of big data applications in order to ensure that the components involved provide efficient storage, processing and retrieval capabilities for large data sets.

In this newsletter, we will focus on Apache Hadoop, one of the most widely used big data frameworks, and its components from a performance testing standpoint.

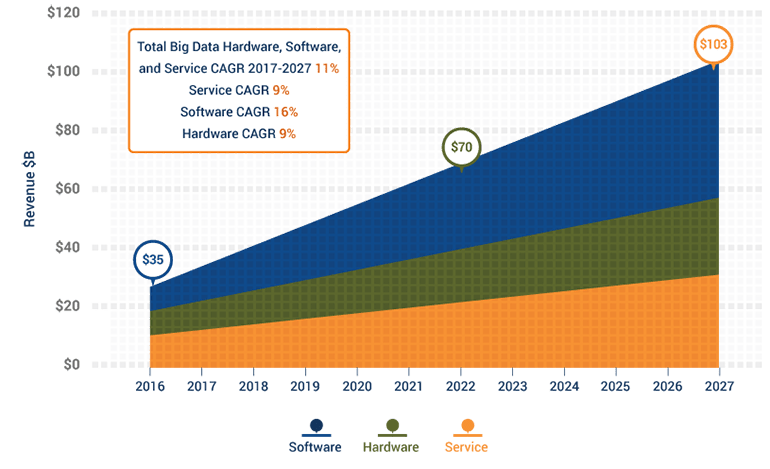

Worldwide Big Data Hardware, Software, and Services Revenue in $B (2016-2027)

According to Wikibon, the big data analytics market grew by 24.5%, as a result of improved public cloud deployments and advancements in tools.

Hadoop Core Components

Why Is Performance Testing Needed For Big Data Applications?

High CPU Utilization

Excessive data compression and decompression consume additional CPU cycles and can lead to high CPU usage.
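As a rough illustration of the trade-off between compression effort and CPU cost, the sketch below measures the CPU time spent compressing one payload at several levels. It uses Python's standard `zlib` module as a stand-in for whichever codec the cluster is configured with; the function name `compression_cpu_seconds` and the sample payload are hypothetical, and the numbers it prints are specific to the machine it runs on.

```python
import os
import time
import zlib


def compression_cpu_seconds(payload: bytes, level: int) -> tuple[float, int]:
    """Return (CPU seconds spent compressing, compressed size in bytes)."""
    start = time.process_time()
    compressed = zlib.compress(payload, level)
    elapsed = time.process_time() - start
    # Round-trip check (outside the timed region) to confirm the output is valid.
    assert zlib.decompress(compressed) == payload
    return elapsed, len(compressed)


if __name__ == "__main__":
    # Hypothetical sample: repetitive text followed by incompressible random bytes.
    sample = (b"user_id,event,timestamp\n" * 20000) + os.urandom(200_000)
    for level in (1, 6, 9):
        cpu, size = compression_cpu_seconds(sample, level)
        print(f"level={level} cpu={cpu:.4f}s compressed={size} bytes")
```

Comparing CPU time against compressed size in this way can help decide whether a heavier codec setting is worth its CPU cost for a given data set.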

High Disk Usage

This mainly occurs when map output fills the circular memory buffer and the data has to be spilled to disk multiple times.
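To get a feel for when multiple spills happen, the following sketch estimates the number of spills for a single map task. It is a simplification: it assumes a spill starts each time the buffer reaches its threshold and ignores the space taken by record metadata. The defaults mirror the MapReduce properties `mapreduce.task.io.sort.mb` (100 MB) and `mapreduce.map.sort.spill.percent` (0.80); the function name `estimate_spills` is hypothetical.

```python
import math


def estimate_spills(map_output_mb: float,
                    sort_mb: float = 100.0,
                    spill_percent: float = 0.80) -> int:
    """Roughly estimate how many times one map task spills to disk.

    Assumption: a spill is triggered whenever sort_mb * spill_percent
    megabytes of map output have accumulated in the buffer.
    """
    if map_output_mb < 0 or sort_mb <= 0 or not 0 < spill_percent <= 1:
        raise ValueError("invalid buffer or output size")
    if map_output_mb == 0:
        return 0
    usable_mb = sort_mb * spill_percent
    return math.ceil(map_output_mb / usable_mb)


if __name__ == "__main__":
    # 250 MB of map output with the default 100 MB buffer.
    print(estimate_spills(250))
    # The same output with a hypothetical 400 MB buffer.
    print(estimate_spills(250, sort_mb=400))
```

When the estimate is greater than one, the spill files have to be merged afterwards, which is where the additional disk usage comes from.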

Low Memory

Data swapping due to low memory leads to performance degradation of Hadoop.

High Network Utilization

Large input or output of MapReduce jobs can lead to high network usage. A high data replication factor is another cause of high network utilization.
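The effect of the replication factor on network traffic can be approximated with simple arithmetic. The sketch below assumes the HDFS write pipeline, in which each replica is forwarded from one DataNode to the next, and assumes that the first replica is stored locally when the writer itself runs on a DataNode. It ignores protocol overhead, and the function name `replication_network_gb` is hypothetical.

```python
def replication_network_gb(data_gb: float,
                           replication: int = 3,
                           writer_is_datanode: bool = True) -> float:
    """Approximate the network traffic caused by writing data_gb to HDFS.

    Assumption: with a local writer the first replica needs no network
    transfer, so only (replication - 1) copies cross the network.
    """
    if data_gb < 0 or replication < 1:
        raise ValueError("invalid data size or replication factor")
    network_copies = replication - 1 if writer_is_datanode else replication
    return data_gb * network_copies


if __name__ == "__main__":
    for factor in (1, 2, 3, 5):
        traffic = replication_network_gb(100, replication=factor)
        print(f"replication={factor}: ~{traffic:.0f} GB over the network")
```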

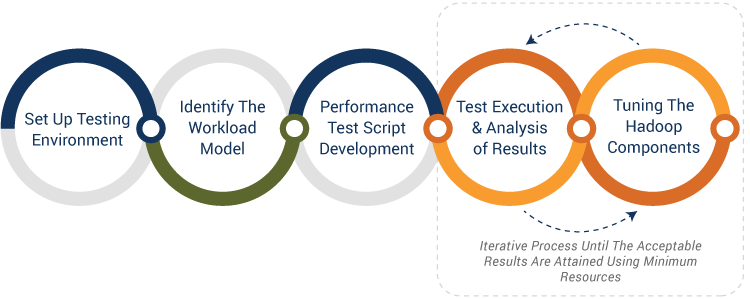

Performance Testing Approach

The performance testing approach for big data applications differs from that of conventional applications in terms of test environment setup, test data, test scenarios, monitoring, and performance tuning.

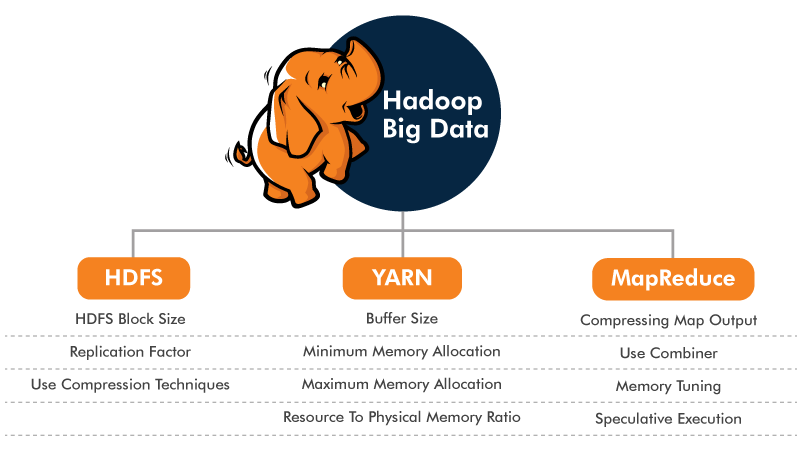

Performance Tuning Of Hadoop Components

For optimal performance, it's essential to tune the various components of the Hadoop ecosystem, which work together to store and process big data. Tuning is necessary because the data involved is large and diverse, and different kinds of data need to be handled differently. Every component should be optimized and monitored to improve the performance of the ecosystem as a whole.

The configuration properties of each core component listed above need to be tuned to get optimal performance from Hadoop.
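As an illustration of what such tuning looks like in practice, the sketch below generates a Hadoop-style site configuration fragment from a dictionary of property overrides. The property names are real MapReduce settings, but the values are hypothetical starting points rather than recommendations, and the helper `build_site_xml` is an assumption made for this example. It relies on `ElementTree.indent`, which requires Python 3.9 or later.

```python
import xml.etree.ElementTree as ET


def build_site_xml(overrides: dict) -> str:
    """Render property overrides in the <configuration>/<property> layout
    used by Hadoop site files such as mapred-site.xml."""
    root = ET.Element("configuration")
    for name, value in overrides.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    ET.indent(root)  # pretty-print; available from Python 3.9
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical values for a test run; tune against measured results.
    candidate = {
        "mapreduce.task.io.sort.mb": "256",
        "mapreduce.map.output.compress": "true",
        "mapreduce.map.output.compress.codec":
            "org.apache.hadoop.io.compress.SnappyCodec",
    }
    print(build_site_xml(candidate))
```

Generating the fragment from code makes it easier to run the same test scenario against several candidate configurations and compare the results.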

Hadoop Load Testing And Monitoring Tools

Load Testing Tools

JMeter (Open Source)

- Provides various performance testing plugins such as HDFS Operations Sampler and HBase Scan Sampler

- JMeter Hadoop Set plugins are used to test the Hadoop ecosystem. Common examples are:

- HDFS Operations: Copy files to the HDFS storage

- HBase CRUD: Create/Read/Update/Delete operations on HBase

- HBase Scan: Retrieve single/multiple records

- Hadoop Job Tracker: Retrieves job or task counters and statistics, such as records read, records processed, and the progress of a job, by job ID and group name

SandStorm (Commercial)

- Provides clients for Cassandra, Mongo, Hadoop, Kafka, RabbitMQ etc.

- Resource monitoring for big data clusters

- NoSQL benchmark utility

Application Monitoring Tools

Key Takeaways For Hadoop Performance Testers

- Performance testing engineers need to have good knowledge of Hadoop components and big data application architecture

- Each Hadoop component needs to be tested separately and in integration

- Knowledge of a scripting language is needed to design the performance test scenarios

- Testers need to execute the performance test with a variety of data sets

- CPU and memory usage need to be monitored for each Hadoop component
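The takeaway about executing tests with a variety of data sets can be sketched as a small generator that produces CSV data with different row counts and record sizes. The column names, the profiles, and the function name `make_dataset` are hypothetical; a real test would model the production data instead.

```python
import csv
import io
import random


def make_dataset(rows: int, payload_chars: int, seed: int = 0) -> str:
    """Return CSV text with `rows` records and a payload of fixed length.

    A fixed seed keeps the data reproducible between test runs.
    """
    rng = random.Random(seed)
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["id", "category", "payload"])
    for i in range(rows):
        category = rng.choice(["A", "B", "C"])
        payload = "".join(rng.choices("abcdefghijklmnopqrstuvwxyz", k=payload_chars))
        writer.writerow([i, category, payload])
    return buffer.getvalue()


if __name__ == "__main__":
    # Hypothetical profiles: many small records versus few large records.
    profiles = {"many_small": (10_000, 16), "few_large": (100, 4_096)}
    for name, (rows, chars) in profiles.items():
        data = make_dataset(rows, chars)
        print(f"{name}: {rows} rows, {len(data)} characters")
```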

Have Suggestions?

We would love to hear your feedback, questions, comments, and suggestions. They will help us make the next edition better and more useful.

Share your thoughts and ideas at knowledgecenter@qasource.com