To test ML-based systems, metamorphic testing describes system functionality in terms of generic relations between inputs, generic transformations of those inputs, and the resulting outputs.

Testing machine-learning-based applications or artificial intelligence systems requires a different approach from testing traditional software systems. Traditional software systems are rule-based collections of logical units, while AI systems are essentially non-deterministic. The outputs of an AI model are probabilistic, so its response to a given input can change over time. As a result, the traditional approach of creating test cases by mapping inputs to specific expected outputs does not work for AI testing.

In software testing, an "oracle" is a mechanism by which testers can determine whether the output of the program under test is correct. In some cases, however, the oracle is unavailable or too complex to use. This is known as the "oracle problem". In other cases, a human tester who manually examines the test results serves as the oracle, but manually predicting and verifying program output significantly reduces the efficiency of testing and increases its cost. Metamorphic testing has been proposed as a way to test programs without an oracle.

What is Metamorphic Testing?

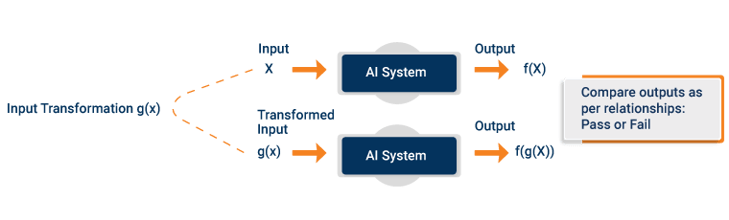

“Metamorphic testing” is one solution that addresses these challenges of testing ML-based systems. Unlike traditional test cases, which map specific inputs to specific outputs, metamorphic tests check inputs and outputs against a generic relationship.

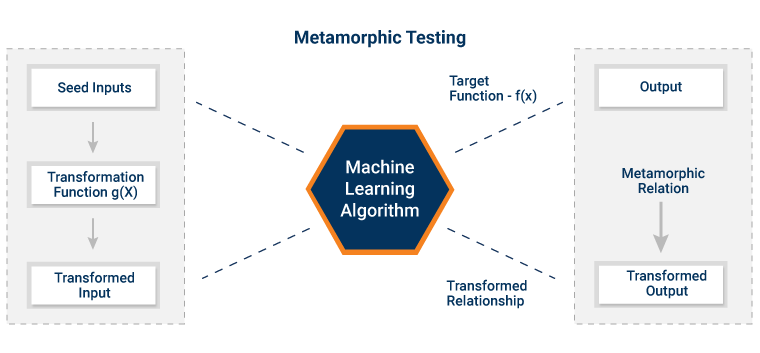

Defining the application functionality in terms of a generic relationship based on the target function or ML model applied in the system is the core idea behind metamorphic testing.
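As a minimal illustration of checking a generic relationship instead of a specific expected output, the sketch below uses a standard textbook case that is not taken from this article: the sine function, for which sin(x) = sin(π − x) must hold for any x. The function name `check_sine_relation` and the tolerance are assumptions made for the example. No expected value of sin(x) is ever needed.

```python
import math
import random

def check_sine_relation(x: float, tol: float = 1e-9) -> bool:
    """Metamorphic relation: sin(x) should equal sin(pi - x)."""
    source_output = math.sin(x)               # output for the seed input
    follow_up_output = math.sin(math.pi - x)  # output for the transformed input
    return math.isclose(source_output, follow_up_output, abs_tol=tol)

# Random seed inputs; none of them needs a known "correct" answer
random.seed(0)
seeds = [random.uniform(-10.0, 10.0) for _ in range(100)]

violations = [x for x in seeds if not check_sine_relation(x)]
print(f"{len(violations)} violations out of {len(seeds)} seed inputs")
```

Any violation would point to a defect in the implementation under test, even though the tester never stated what the correct output for a given input should be.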

It enables us to evaluate the behaviour of ML/AI models on different transformed inputs representing real world scenarios.

Some Key Terms in Metamorphic Testing

Metamorphic Relation

It defines the relationship between morphed input and its output and is derived from the target function applied in the ML model. AI QA teams need to study the target function in order to gauge its effect on the output for morphed input.

Transformed/Morphed Input

In order to evaluate the behaviour of ML models for real world scenarios, we need to transform the seed inputs accordingly and verify that the output generated is in accordance with the metamorphic relationship.

Seed Inputs

These are the input data points that are used in initial test datasets for model testing and then are transformed for performing metamorphic testing.

The Metamorphic Test

Let’s say that f(x) is the target function used in the ML algorithm. An AI QA engineer evaluates this target function and derives a metamorphic relationship between the inputs and outputs. A suitable transformation g(x) is then applied to the input in accordance with the test requirements, and the associated output f(g(x)) is assessed against the metamorphic relationship.
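The procedure above can be sketched as a small, generic harness. Everything named here is hypothetical and chosen only for illustration: `run_metamorphic_test`, the toy target function `toy_model` (which returns the index of the largest feature), the transformation `double_features`, and the relation `same_output`. A real test would plug in the trained model and a relation derived from its target function.

```python
from typing import Callable, Iterable, List, Sequence

def run_metamorphic_test(
    f: Callable,         # target function f(x)
    g: Callable,         # input transformation g(x)
    relation: Callable,  # relation(f(x), f(g(x))) -> bool
    seeds: Iterable,
) -> List:
    """Return the seed inputs for which the metamorphic relation fails."""
    failures = []
    for x in seeds:
        source_output = f(x)
        follow_up_output = f(g(x))
        if not relation(source_output, follow_up_output):
            failures.append(x)
    return failures

# Hypothetical target function: index of the largest feature
def toy_model(features: Sequence[float]) -> int:
    return max(range(len(features)), key=lambda i: features[i])

# Transformation: scale every feature by the same positive constant
def double_features(features: Sequence[float]) -> List[float]:
    return [2.0 * v for v in features]

# Relation: the output must not change under the transformation
def same_output(source_output: int, follow_up_output: int) -> bool:
    return source_output == follow_up_output

seed_inputs = [[1.0, 3.0, 2.0], [0.5, 0.25, 4.0], [10.0, -3.0, 7.5]]
failures = run_metamorphic_test(toy_model, double_features, same_output, seed_inputs)
print("failing seeds:", failures)
```

Keeping f, g, and the relation as separate arguments means the same harness can be reused for each metamorphic relation the team derives.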

Some examples of metamorphic relations are as follows:

- Affine Transformation: This metamorphic relationship verifies that a transformation of the input data that does not change the underlying pattern has no impact on the output. For example, applying the transformation g(x) = Ax + B (A ≠ 0) to the input features of the test dataset should have no impact on the output.

- Permutation of the Attributes: This metamorphic relationship verifies that a transformation that permutes the input attributes does not change the end result.

- Addition of Uninformative Attributes: When testing a classifier, this metamorphic relationship verifies that adding an uninformative attribute, one that is equally associated with all prediction classes, does not change the predicted class label.
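The three relations above can be checked against a toy classifier. The sketch below assumes a 1-nearest-neighbour classifier with squared Euclidean distance, written from scratch for illustration, and it assumes that each transformation is applied consistently to the training data and to the query. The data, the constants in `affine`, and all function names are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Row = List[float]
Labeled = Tuple[Row, str]

def predict_1nn(train: Sequence[Labeled], query: Row) -> str:
    """Label of the training row closest to the query (squared Euclidean distance)."""
    def dist(a: Row, b: Row) -> float:
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return min(train, key=lambda item: dist(item[0], query))[1]

# Transformations g(x), applied to every row
def affine(row: Row) -> Row:
    return [2.0 * v + 1.0 for v in row]   # g(x) = Ax + B with A = 2, B = 1

def permute(row: Row) -> Row:
    return list(reversed(row))            # reorder the attributes

def add_uninformative(row: Row) -> Row:
    return row + [7.0]                    # same constant for every row

def relation_holds(g: Callable[[Row], Row],
                   train: Sequence[Labeled],
                   queries: Sequence[Row]) -> bool:
    """True if predictions are unchanged after transforming training data and queries."""
    morphed_train = [(g(features), label) for features, label in train]
    return all(
        predict_1nn(train, q) == predict_1nn(morphed_train, g(q))
        for q in queries
    )

train_data = [([1.0, 1.0], "low"), ([1.5, 2.0], "low"),
              ([5.0, 5.0], "high"), ([6.0, 4.5], "high")]
test_rows = [[1.2, 1.4], [5.5, 5.0], [3.0, 2.5]]

results = {g.__name__: relation_holds(g, train_data, test_rows)
           for g in (affine, permute, add_uninformative)}
print(results)
```

Whether a given relation should hold depends on the algorithm. A distance-based model like this one is expected to satisfy all three, while other model families may legitimately violate some of them.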

Types of Metamorphic Relations

There are numerous types of metamorphic relations. The most common are:

- Positive Metamorphic Relation: An increase in certain input variables should increase the probability of a prediction class. For example, when evaluating credit risk, an increase in annual income or investments may increase the probability of loan repayment.

- Negative Metamorphic Relation: An increase in certain input variables should decrease the probability of a prediction class. For example, when evaluating credit risk, an increase in current debt or liabilities may decrease the probability of loan repayment (that is, increase the probability of credit default).

- Invariance Between Transformed Input and Output: The output of the AI model should not be affected by the transformation of the input data.
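The relation types above can be expressed as simple checks on a model's predicted probability. In the sketch below, `repayment_probability` is a hypothetical stand-in for a trained credit-risk model, with made-up coefficients and field names. Only the shape of the checks is the point.

```python
import math

def repayment_probability(applicant: dict) -> float:
    """Hypothetical stand-in for a trained credit-risk model."""
    z = (0.00004 * applicant["annual_income"]
         - 0.00008 * applicant["current_debt"]
         - 0.5)
    return 1.0 / (1.0 + math.exp(-z))

def with_value(applicant: dict, field: str, value) -> dict:
    """Copy of the applicant with one field replaced."""
    changed = dict(applicant)
    changed[field] = value
    return changed

def check_positive(model, applicant, field, delta) -> bool:
    """Increasing the field should not lower the predicted probability."""
    morphed = with_value(applicant, field, applicant[field] + delta)
    return model(morphed) >= model(applicant)

def check_negative(model, applicant, field, delta) -> bool:
    """Increasing the field should not raise the predicted probability."""
    morphed = with_value(applicant, field, applicant[field] + delta)
    return model(morphed) <= model(applicant)

def check_invariant(model, applicant, field, new_value) -> bool:
    """Changing the field should leave the prediction unchanged."""
    return model(with_value(applicant, field, new_value)) == model(applicant)

seed = {"applicant_id": 101, "annual_income": 60000.0, "current_debt": 15000.0}

print("positive (income):", check_positive(repayment_probability, seed, "annual_income", 10000.0))
print("negative (debt):", check_negative(repayment_probability, seed, "current_debt", 5000.0))
print("invariant (id):", check_invariant(repayment_probability, seed, "applicant_id", 999))
```

For a real model, each check would be run over many seed inputs, and exact equality in the invariance check might be relaxed to a small tolerance.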

Execution in Metamorphic Testing

Metamorphic tests can be executed together with cross-validation to make testing more efficient without relying on an oracle. Cross-validation is generally applied when testing an AI model's performance by comparing actual and predicted results. Combining the two is an effective way to test models thoroughly: evaluating transformed inputs against metamorphic relations, together with the wide coverage that cross-validation provides, helps capture more defects.
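The article does not spell out the exact procedure, so the following is only one possible reading: within each cross-validation fold, report the usual accuracy on the held-out rows and, alongside it, the share of held-out rows for which a metamorphic relation holds. The 1-nearest-neighbour model, the interleaved fold split, the data, and all names are assumptions made for this sketch.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Row = List[float]
Labeled = Tuple[Row, str]

def predict_1nn(train: Sequence[Labeled], query: Row) -> str:
    """Label of the nearest training row (squared Euclidean distance)."""
    def dist(a: Row, b: Row) -> float:
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return min(train, key=lambda item: dist(item[0], query))[1]

def k_fold_indices(n: int, k: int) -> List[List[int]]:
    """Simple interleaved split of range(n) into k folds."""
    return [list(range(i, n, k)) for i in range(k)]

def cross_validated_metamorphic_check(
    data: Sequence[Labeled], k: int, g: Callable[[Row], Row]
) -> List[Dict[str, float]]:
    report = []
    for fold in k_fold_indices(len(data), k):
        held_out = [data[i] for i in fold]
        train = [data[i] for i in range(len(data)) if i not in fold]
        morphed_train = [(g(x), y) for x, y in train]
        # Conventional check: needs ground-truth labels
        correct = sum(predict_1nn(train, x) == y for x, y in held_out)
        # Metamorphic check: compares predictions only, no labels needed
        consistent = sum(
            predict_1nn(train, x) == predict_1nn(morphed_train, g(x))
            for x, _ in held_out
        )
        report.append({
            "accuracy": correct / len(held_out),
            "relation_pass_rate": consistent / len(held_out),
        })
    return report

def permute(row: Row) -> Row:
    return list(reversed(row))

dataset = [([1.0, 1.0], "low"), ([5.0, 5.0], "high"), ([1.5, 2.0], "low"),
           ([6.0, 4.5], "high"), ([2.0, 1.2], "low"), ([5.5, 6.5], "high")]

report = cross_validated_metamorphic_check(dataset, k=3, g=permute)
for fold_number, fold_result in enumerate(report):
    print(fold_number, fold_result)
```

A fold with high accuracy but a low relation pass rate would suggest a defect that the labelled test data alone does not reveal.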

Conclusion

Metamorphic testing provides an approach for testing AI/ML systems using metamorphic relations. These relations help the AI QA engineer verify an ML model’s output after a given transformation is applied to the input. The main advantage of metamorphic testing is that it can act as a test oracle, helping the AI QA team generate test cases beyond the available test datasets, which is otherwise not possible. It also helps in testing AI systems with test data that has no ground-truth labels, because the metamorphic relations evaluate the relationship between input and output rather than comparing against ground truth.
