Artificial neural networks (ANNs) are programs inspired by the human brain that enable deep learning applications to learn from large unstructured datasets such as text, images, and videos. Many industries now use ANNs, with manufacturing, security, and healthcare among those leading adoption.

ANN Application Trend in Diverse Sectors

Source: ScienceDirect

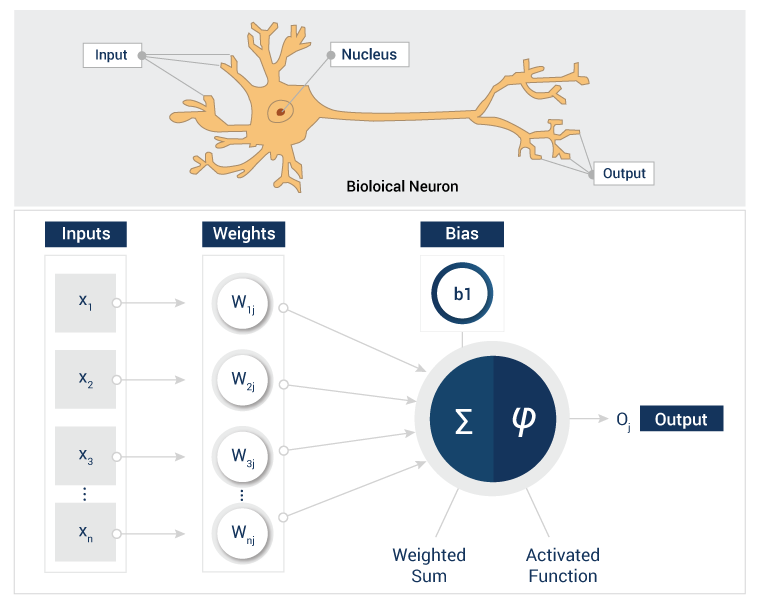

The fundamental component of a neural network is the 'artificial neuron', modeled on the biological neurons in a human brain. Simply put, an artificial neuron takes several inputs, processes them, and passes its output to other neurons in the network.

Components of an Artificial Neuron

Weights

A weight is assigned to each feature at the input layer. Each input is multiplied by its weight, and the sum of these products (the weighted sum) is passed as input to the neuron's activation function. During training, the weights are optimized to minimize the loss function.
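As a rough illustration of the weighted sum described above, here is a minimal Python sketch. The feature and weight values are made up for the example, and `weighted_sum` is a hypothetical helper name rather than a function from any particular library.

```python
def weighted_sum(inputs, weights):
    """Multiply each input feature by its weight and add up the products."""
    if len(inputs) != len(weights):
        raise ValueError("each input feature needs exactly one weight")
    return sum(x * w for x, w in zip(inputs, weights))


# Illustrative values only (assumed for this example)
features = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]

z = weighted_sum(features, weights)
print(round(z, 6))  # 0.2 - 0.3 - 0.4 = -0.5
```

The value `z` is what the neuron would hand to its activation function.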

Bias

Optimizing the weights changes only the steepness of the activation curve (a sigmoid, for example). To shift the curve left or right, ‘bias’ values are required. This shift of the activation function is critical for successfully training a deep learning model.

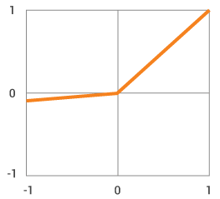
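To make the roles of weight and bias concrete, here is a small Python sketch of a one-input sigmoid neuron. The names `neuron` and `midpoint` are hypothetical, and the numbers are chosen only for illustration. The midpoint is the input at which the output equals 0.5, which for this neuron is `-b / w`.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def neuron(x, w, b):
    """Output of a single-input neuron: sigmoid(w * x + b)."""
    return sigmoid(w * x + b)


def midpoint(w, b):
    """Input value at which the neuron outputs exactly 0.5."""
    return -b / w


# A larger weight makes the curve steeper but leaves its midpoint at x = 0.
print(neuron(0.0, w=1.0, b=0.0), neuron(0.0, w=5.0, b=0.0))
print(neuron(1.0, w=1.0, b=0.0) < neuron(1.0, w=5.0, b=0.0))

# A bias of -2 moves the midpoint from x = 0 to x = 2.
print(midpoint(w=1.0, b=-2.0), neuron(2.0, w=1.0, b=-2.0))
```

With the bias fixed at zero, no choice of weight can move the point where the curve crosses 0.5, which is why the bias term is needed.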

Activation Function

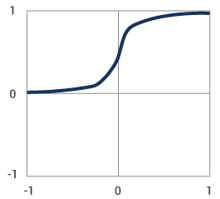

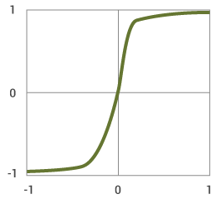

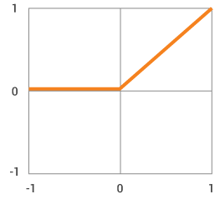

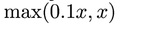

Whether a neuron fires depends on the output of the activation function, which is applied to the weighted sum of the inputs plus the bias. The activation function introduces non-linearity into the output of a neuron. Common activation functions include Sigmoid, Tanh, ReLU, and Leaky ReLU.

| Activation Function | Function | Plot |

|---|---|---|

|

Sigmoid

|

|

|

|

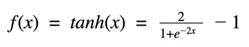

Tanh

|

|

|

|

ReLU

|

|

|

|

Leaky ReLU

|

|

|

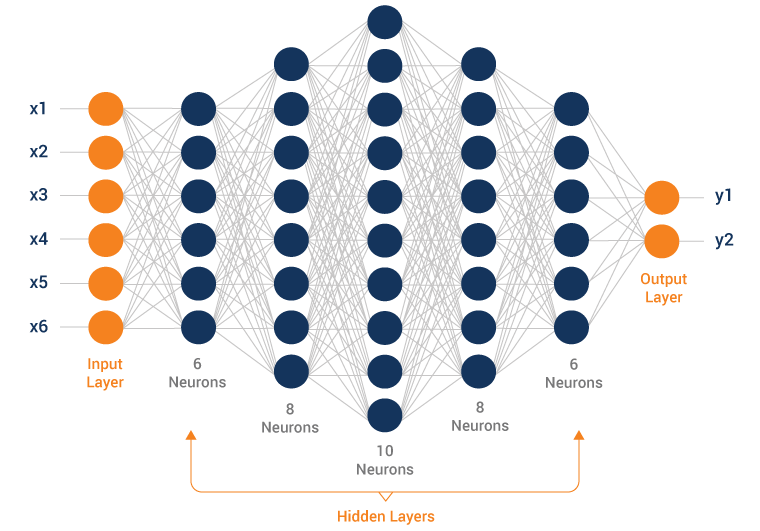
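The four activation functions named above can be sketched in a few lines of Python. This is a minimal version using only the standard library. The default `alpha=0.01` for Leaky ReLU is a commonly used value and is an assumption here, not something the article specifies.

```python
import math


def sigmoid(x):
    """Squashes any real input into the range (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # Equivalent form that avoids overflow for large negative inputs
    e = math.exp(x)
    return e / (1.0 + e)


def tanh(x):
    """Squashes any real input into the range (-1, 1)."""
    return math.tanh(x)


def relu(x):
    """Passes positive inputs through and outputs zero otherwise."""
    return max(0.0, x)


def leaky_relu(x, alpha=0.01):
    """Like ReLU, but keeps a small slope (alpha) for negative inputs."""
    return x if x > 0 else alpha * x


for value in (-2.0, 0.0, 2.0):
    print(value, sigmoid(value), tanh(value), relu(value), leaky_relu(value))
```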

Elements of a Deep Neural Network

1. Input Layer

Real-world data is fed to the neural network at the input layer. No computation is performed here; the data is simply passed on to the hidden layers.

2. Hidden Layer

The hidden layers are where the computation happens. The forward pass produces each neuron's output, and backpropagation works out how the weights and biases should change. Variants of the gradient descent algorithm then update the weights to reduce the error. A network can have multiple hidden layers, each with a different number of neurons, and the last hidden layer passes its results to the output layer.

3. Output Layer

This layer provides the predictions made by the model. The loss is calculated by comparing the model’s predictions with the expected results, and it is minimized using gradient descent algorithms.

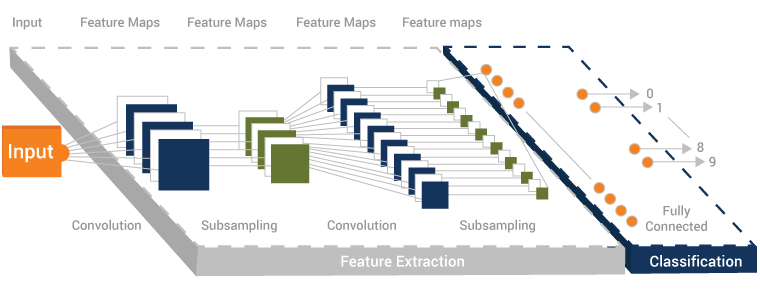
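The three layers described above can be tied together in a short Python sketch. It is a minimal illustration, not a production implementation. It assumes a network with two inputs, one hidden layer of two sigmoid neurons, one sigmoid output, a mean squared error loss, and plain full-batch gradient descent. The starting weights, the learning rate, and the toy dataset (the logical OR of two bits) are all made up for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, params):
    """Forward pass: input layer -> hidden layer -> output layer."""
    W1, b1, W2, b2 = params
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    output = sigmoid(sum(w * h for w, h in zip(W2, hidden)) + b2)
    return hidden, output


def mean_squared_error(params, data):
    """Loss: average squared gap between predictions and expected results."""
    return sum((forward(x, params)[1] - y) ** 2 for x, y in data) / len(data)


def gradient_descent_step(params, data, lr):
    """One backpropagation pass over all samples, then one weight update."""
    W1, b1, W2, b2 = params
    n = len(data)
    gW1 = [[0.0] * len(row) for row in W1]
    gb1 = [0.0] * len(b1)
    gW2 = [0.0] * len(W2)
    gb2 = 0.0
    for x, y in data:
        hidden, out = forward(x, params)
        # Gradient of the loss with respect to the output neuron's weighted sum
        d_out = 2.0 * (out - y) * out * (1.0 - out) / n
        gb2 += d_out
        for j, h in enumerate(hidden):
            gW2[j] += d_out * h
            # Propagate the error back through hidden neuron j
            d_hidden = d_out * W2[j] * h * (1.0 - h)
            gb1[j] += d_hidden
            for i, xi in enumerate(x):
                gW1[j][i] += d_hidden * xi
    new_W1 = [[w - lr * g for w, g in zip(row, grow)]
              for row, grow in zip(W1, gW1)]
    new_b1 = [b - lr * g for b, g in zip(b1, gb1)]
    new_W2 = [w - lr * g for w, g in zip(W2, gW2)]
    new_b2 = b2 - lr * gb2
    return new_W1, new_b1, new_W2, new_b2


# Toy dataset (assumed): logical OR of two inputs
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
# Arbitrary starting weights and biases (assumed)
params = ([[0.5, -0.4], [0.3, 0.8]], [0.0, 0.0], [0.7, -0.6], 0.0)

loss_before = mean_squared_error(params, data)
for _ in range(2000):
    params = gradient_descent_step(params, data, lr=0.5)
loss_after = mean_squared_error(params, data)

print("loss before training:", loss_before)
print("loss after training:", loss_after)
```

In practice, libraries such as TensorFlow or PyTorch compute these gradients automatically; writing them out by hand here is only meant to show what happens at each layer.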

Computer Vision

Computer vision gives computers the ability to see, identify, and process objects in images and videos. It is used for object identification, medical image analysis, event detection, video tracking, motion estimation, and more. The most commonly used algorithms for computer vision are variants of the convolutional neural network (CNN).

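The convolutional layer is the core of a CNN. It is defined by parameters such as filters (kernels), padding, stride, and channels. The kernel weights are learned during training, while the kernel size, padding, and stride are chosen when the network is designed. Together, these parameters enable teams to process and analyze image data, extract important features from it, and train the deep learning model.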

Learning Parameters

- Kernel/Filter Size: The kernel (filter) is a small matrix of weights that is convolved with the input. Its size, for example 3×3, sets how large a region of the input each output value covers.

- Padding: Rows and columns of zeroes added symmetrically around the input before convolution, so that the output preserves the original size of the image. Padding also helps retain the information at the borders.

- Stride: The number of pixels the kernel shifts at each step as it traverses the input horizontally and vertically during convolution.
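The effect of kernel size, padding, and stride on the output can be seen in a small Python sketch. It is a simplified single-channel version that assumes a square kernel and zero padding. Like most deep learning libraries, it slides the kernel over the input without flipping it. The function name `conv2d` and the sample image are made up for the example. For an input of size n, kernel size k, padding p, and stride s, the output size along each dimension is floor((n + 2p - k) / s) + 1.

```python
def conv2d(image, kernel, stride=1, padding=0):
    """Slide a square kernel over a 2D image (single channel, zero padding)."""
    k = len(kernel)
    width = len(image[0]) + 2 * padding
    # Add rows and columns of zeroes symmetrically around the image
    padded = [[0] * width for _ in range(padding)]
    padded += [[0] * padding + list(row) + [0] * padding for row in image]
    padded += [[0] * width for _ in range(padding)]

    out_h = (len(padded) - k) // stride + 1
    out_w = (width - k) // stride + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            top, left = r * stride, c * stride
            # Weighted sum of the patch currently under the kernel
            row.append(sum(kernel[i][j] * padded[top + i][left + j]
                           for i in range(k) for j in range(k)))
        output.append(row)
    return output


# Illustrative 4x4 image and 3x3 kernel of ones (assumed values)
image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
kernel = [[1, 1, 1],
          [1, 1, 1],
          [1, 1, 1]]

no_padding = conv2d(image, kernel)                  # output shrinks to 2x2
same_size = conv2d(image, kernel, padding=1)        # output stays 4x4
strided = conv2d(image, kernel, stride=2, padding=1)  # output is 2x2

print(no_padding)
print(len(same_size), len(same_size[0]))
print(len(strided), len(strided[0]))
```

Without padding, the 4×4 input shrinks to 2×2. With one ring of zeroes, the output keeps the original 4×4 size. A stride of 2 roughly halves each dimension.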

Key Takeaways

- The performance of a neural network depends on the quality of its training data and on its architecture.

- It is important to add bias to the network so that the activation curve can be shifted for a better fit.

QASource's Deep Learning Expertise

Our team at QASource has extensive experience in building and testing deep learning systems and custom neural networks using CNN architectures like ResNet, AlexNet, and OneNet. We use various deep learning development libraries like TensorFlow, PyTorch, Theano, and Keras. Our engineering teams have experience in developing and testing deep neural networks for applications such as computer vision, object detection, and object identification.

Have Suggestions?

We would love to hear your feedback, questions, comments, and suggestions. They will help us make our content better and more useful next time.

Share your thoughts and ideas at knowledgecenter@qasource.com